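This guide deploys k8s (Kubernetes) 1.30. Every component is the latest release as of August 2024, the operating system is CentOS 7 (whose official yum repositories are no longer maintained), and all components run as systemd services.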

The packages used in this guide can be found and downloaded from the official Kubernetes blog and from GitHub.

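k8s architecture and component overview

Before deploying, it helps to understand the k8s architecture and its components. Nodes are divided into Masters, which run the control-plane components, and worker Nodes, which run the node components. Containers normally run on the worker Nodes.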

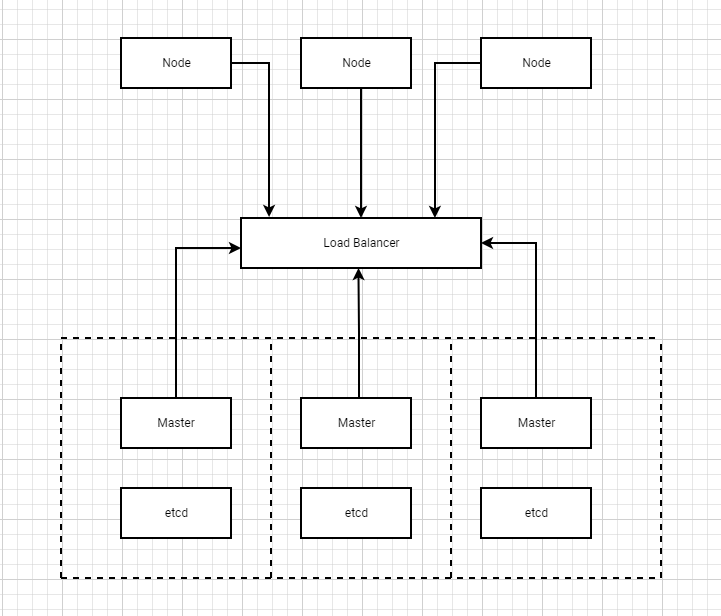

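HA cluster architecture with multiple Master nodes

Load Balancer: HAProxy/Nginx + Keepalived. HAProxy does the load balancing, and Keepalived health-checks the Master nodes and manages the VIP (virtual IP).

Etcd: stores the k8s cluster state. It is a highly available, distributed key-value database written in Go, mainly used for shared configuration and service discovery. It can be deployed on its own servers or alongside the cluster.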

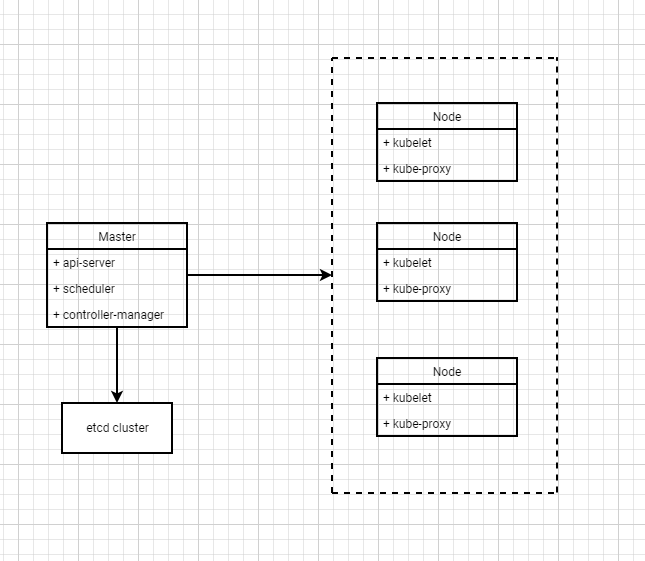

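Single-Master architecture and its components

k8s control-plane components

kube-apiserver: the core component of k8s and the entry point for all interaction with the cluster. It handles both internal and external requests, acts as a gateway (authentication, authorization, request forwarding) and provides service registration and discovery.

kube-controller-manager: runs the k8s controllers, which keep the cluster running by continuously watching the state of cluster resources and reacting to changes.

kube-scheduler: the k8s scheduler. It assigns Pods to Nodes and records the decision through the api-server.

etcd: stores configuration data and the state of the cluster.

k8s node components

kubelet: keeps Pods running and healthy, and manages container operations on its node.

kube-proxy: the network proxy that runs on every node. It handles network traffic inside the cluster and from outside it, load-balances Service traffic and forwards traffic arriving at the node to the right containers.

Container Runtime: the engine that actually runs containers, such as Docker, rkt, containerd, CRI-O or runC. Containers run inside Pods, and Pods run on the Nodes.

k8s interfaces

CRI: Container Runtime Interface (docker, containerd, cri-o)

CNI: Container Network Interface (flannel, calico, cilium)

CSI: Container Storage Interface (nfs, ceph, gfs, oss, minio)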

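Pre-deployment preparation

Common deployment methods

Kubeadm: the official k8s deployment tool. It can bring up a cluster quickly, but some manual configuration is still required. All control-plane components run as containers, and the servers need Internet access to reach the upstream image registry (registry.k8s.io, formerly hosted on Google's infrastructure).

Native binaries: the preferred choice for production. You download the release binaries, deploy each component by hand and issue self-signed TLS certificates to assemble the cluster. No Internet access is needed during deployment, only connectivity between the servers on the internal network. All components run as native binaries and are configured manually.

Environment requirements

- Minimum resources: 2 CPU cores and 2 GB of RAM
- Operating system: a Debian- or Red Hat-family Linux distribution
- Network: all servers can reach one another (over a public or private network)
- Cluster: hostnames and MAC addresses must be unique across all servers
- Ports: the ports used by every component must be open
- Swap: disabled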

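Environment plan

This guide builds one Master and two worker Nodes, with the etcd cluster co-located on the k8s servers. Replace the hostnames and IPs with your own, following the table below: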

| Hostname | IP | Services |
| --- | --- | --- |
| k8s-master | 10.80.99.230 | api-server, controller-manager, scheduler, kubectl, kubelet, kube-proxy, etcd |
| k8s-node1 | 10.80.99.231 | kubelet, kube-proxy, etcd |
| k8s-node2 | 10.80.99.232 | kubelet, kube-proxy, etcd |

In the steps below, replace these IPs with the ones from your own plan. The table is for reference.

Deploying k8s (native binaries)


Environment initialization

Run the following on every Master and Node, as root.

```bash
# Disable the firewall
systemctl disable firewalld
systemctl stop firewalld

# Put SELinux into permissive mode (persistently, and for the current boot)
sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
setenforce 0

# Flush the iptables rules
iptables -F && iptables -X && iptables -Z
iptables -P FORWARD ACCEPT

# Set the hostname (must be unique: use k8s-node1 / k8s-node2 on the worker nodes)
hostnamectl set-hostname k8s-master

# Add name resolution for all nodes
cat >> /etc/hosts << EOF
10.80.99.230 k8s-master
10.80.99.231 k8s-node1
10.80.99.232 k8s-node2
EOF
```
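Running the `/etc/hosts` append twice adds duplicate entries. The sketch below makes the step idempotent by appending a mapping only when an uncommented line already pairing that IP with that exact hostname is missing. `ensure_host_entry` is a hypothetical helper name, and the optional third argument exists only so the function can be pointed at a test file.

```bash
#!/usr/bin/env bash
# ensure_host_entry IP HOSTNAME [FILE] -- hypothetical helper, not part of any tool.
# Appends "IP HOSTNAME" to FILE (default /etc/hosts) unless that mapping already exists.
ensure_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  local ip_re="${ip//./[.]}"   # 10.80.99.231 -> 10[.]80[.]99[.]231 so dots match literally
  # Uncommented line: the IP, whitespace, then the exact hostname as a whole word
  # (so k8s-node1 does not match an existing k8s-node10 entry).
  local re="^[[:space:]]*${ip_re}[[:space:]]+(.*[[:space:]])?${name}([[:space:]]|\$)"
  if grep -qE -- "$re" "$file" 2>/dev/null; then
    return 0                   # mapping already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage: `ensure_host_entry 10.80.99.232 k8s-node2` can be run any number of times on each node.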

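```bash
# Disable swap now and comment it out in /etc/fstab so it stays off after a reboot
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Load the overlay and br_netfilter kernel modules at boot
cat <<EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

# Pass bridged traffic through iptables and enable IP forwarding
cat <<EOF | tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Load the modules now and apply the sysctl settings without rebooting
modprobe overlay && modprobe br_netfilter
sysctl --system

# Script that loads the IPVS modules (used by kube-proxy in ipvs mode)
cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF

# Make the script executable (755) and run it
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules
```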
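To confirm that the settings from `/etc/sysctl.d/k8s.conf` are actually active, you can read them back from `/proc/sys`. This is a minimal sketch. `check_k8s_sysctls` is a hypothetical helper, and its optional argument, which replaces `/proc/sys`, exists only so it can be exercised against a fake directory tree.

```bash
#!/usr/bin/env bash
# check_k8s_sysctls [ROOT] -- hypothetical helper; ROOT defaults to /proc/sys.
# Returns 0 only if all three k8s kernel parameters are present and set to 1.
check_k8s_sysctls() {
  local root=${1:-/proc/sys} key path value rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/$(tr . / <<<"$key")"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [[ ! -r $path ]]; then
      # The bridge keys only exist after br_netfilter is loaded
      echo "MISSING $key (is br_netfilter loaded?)" >&2
      rc=1
      continue
    fi
    value=$(<"$path")
    if [[ $value != 1 ]]; then
      echo "WRONG   $key = $value (want 1)" >&2
      rc=1
    fi
  done
  return $rc
}
```

On a real node, run `check_k8s_sysctls && echo ok` after `sysctl --system`.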

```bash
# Passwordless SSH from the master (run on k8s-master)
ssh-keygen -t ed25519

# Copy the public key to the worker nodes
for i in 231 232; do ssh-copy-id root@10.80.99.$i; done
```

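Deploying containerd

The Docker runtime integration (dockershim) was deprecated in k8s 1.20 and removed in 1.24, so the kubelet now talks directly to a CRI runtime such as containerd. containerd therefore has to be installed first. containerd together with runC is also the runtime stack that Docker itself is built on.

containerd package list

The packages can be found on the official Kubernetes blog and on GitHub. Download URLs for each package will be added later.

Upload the packages to /opt/install/containerd on the master node.

- containerd-1.7.16-linux-amd64.tar.gz (container runtime)
- nerdctl-1.7.6-linux-amd64.tar.gz (Docker-compatible CLI for containerd)
- runc.amd64 (low-level tool that creates and runs containers)
- cni-plugins-linux-amd64-v1.5.1.tgz (Container Network Interface plugins)

Installing containerd

After the master has distributed the packages, run the following on every node.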

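```bash
# On every node: create the install directories
mkdir -p /opt/install/{k8s,containerd}

# On the master: copy the packages to each worker node
scp /opt/install/containerd/* root@k8s-node1:/opt/install/containerd/
scp /opt/install/containerd/* root@k8s-node2:/opt/install/containerd/

# On every node: unpack containerd into /usr/local
cd /opt/install/containerd
tar Cxzvf /usr/local containerd-1.7.16-linux-amd64.tar.gz

# Check that the version is reported
containerd -v
```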

```bash
# Create the systemd unit at /usr/local/lib/systemd/system/containerd.service
mkdir -p /usr/local/lib/systemd/system
cat > /usr/local/lib/systemd/system/containerd.service << 'EOF'
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target local-fs.target

[Service]
ExecStartPre=-/sbin/modprobe overlay
ExecStart=/usr/local/bin/containerd
Type=notify
Delegate=yes
KillMode=process
Restart=always
RestartSec=5
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNPROC=infinity
LimitCORE=infinity
# Comment TasksMax if your systemd version does not supports it.
# Only systemd 226 and above support this version.
TasksMax=infinity
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target
EOF
```

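```bash
# Register containerd with systemd and start it at boot
systemctl daemon-reload
systemctl enable --now containerd

# Install runc and check its version
install -m 755 runc.amd64 /usr/local/sbin/runc
runc -v

# Install the CNI plugins
mkdir -p /opt/cni/bin
tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.5.1.tgz

# Generate the default containerd configuration
mkdir -p /etc/containerd
containerd config default > /etc/containerd/config.toml

# Edit the configuration. Replace "harbor" with your private registry address,
# or skip the pause-image substitution if you don't use a private registry.
sed -i 's/registry.k8s.io\/pause:3.8/harbor\/google_containers\/pause:3.9/g' /etc/containerd/config.toml
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
sed -i 's|config_path = ""|config_path = "/etc/containerd/certs.d"|g' /etc/containerd/config.toml

# Restart containerd so the new configuration takes effect
systemctl restart containerd
```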
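A `sed` substitution that matches nothing fails silently, so it is worth confirming that the three edits actually landed in `config.toml`. This is a grep-based sketch. `verify_containerd_config` is a hypothetical helper, and the expected sandbox image is the `harbor/google_containers/pause:3.9` placeholder used above, so adjust it to your registry.

```bash
#!/usr/bin/env bash
# verify_containerd_config [FILE] -- hypothetical helper; FILE defaults to the containerd config.
# Checks that each expected setting appears somewhere in the file (indentation is ignored).
verify_containerd_config() {
  local file=${1:-/etc/containerd/config.toml} rc=0 c
  local -a checks=(
    'SystemdCgroup = true'
    'config_path = "/etc/containerd/certs.d"'
    'sandbox_image = "harbor/google_containers/pause:3.9"'   # placeholder registry
  )
  for c in "${checks[@]}"; do
    # -F: fixed string, -q: quiet; "--" guards against patterns starting with "-"
    if ! grep -qF -- "$c" "$file"; then
      echo "not found in $file: $c" >&2
      rc=1
    fi
  done
  return $rc
}
```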

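```bash
# Install nerdctl so that Docker-style commands are available
tar -zxvf nerdctl-1.7.6-linux-amd64.tar.gz -C /usr/local/bin

# Provide a "docker" wrapper that passes all arguments through to nerdctl unchanged
cat << 'EOF' > /usr/local/bin/docker
#!/bin/bash
exec /usr/local/bin/nerdctl "$@"
EOF

# Make the wrapper executable
chmod +x /usr/local/bin/docker
```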

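Creating the etcd certificates

SSL/TLS tool package list

Upload the packages to /tmp on the master node.

- cfssl (download URL: https://pkg.cfssl.org/R1.2/cfssl_linux-amd64)
- cfssljson (download URL: https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64)

Creating the self-signed SSL/TLS certificates

Run on the master node.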

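```bash
# Remove leftovers from a previous attempt (do this before uploading cfssl and cfssljson)
rm -f /tmp/cfssl* && rm -rf /tmp/certs && mkdir -p /tmp/certs

# After uploading cfssl and cfssljson to /tmp, make them executable and move them onto the PATH
chmod +x /tmp/cfssl
mv /tmp/cfssl /usr/local/bin/cfssl
chmod +x /tmp/cfssljson
mv /tmp/cfssljson /usr/local/bin/cfssljson

# Verify
/usr/local/bin/cfssl version
/usr/local/bin/cfssljson -h
```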

```bash
# Create the certificate source directories
mkdir -p /ca/{fssl,etcd}

# Root CA certificate signing request
cat > /ca/fssl/ca-csr.json << 'EOF'
{
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "O": "kubernetes",
      "OU": "system",
      "L": "Your_City",
      "ST": "Your_State",
      "C": "CN"
    }
  ],
  "CN": "kubernetes"
}
EOF

# Generate the root CA (ca.pem, ca-key.pem)
cfssl gencert --initca=true /ca/fssl/ca-csr.json | cfssljson --bare /ca/fssl/ca

# Verify
openssl x509 -in /ca/fssl/ca.pem -text -noout
```

```bash
# Signing policy (certificates valid for 87600h, i.e. 10 years)
cat > /ca/fssl/ca-config.json << 'EOF'
{
  "signing": {
    "default": {
      "usages": [
        "signing",
        "key encipherment",
        "server auth",
        "client auth"
      ],
      "expiry": "87600h"
    }
  }
}
EOF
```

Creating the etcd server certificate

Run on the master node.

```bash
# etcd certificate signing request (hosts must list every etcd member IP)
cat > /ca/fssl/etcd-ca-csr.json << 'EOF'
{
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "O": "etcd",
      "OU": "system",
      "L": "Your_City",
      "ST": "Your_State",
      "C": "CN"
    }
  ],
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "localhost",
    "10.80.99.230",
    "10.80.99.231",
    "10.80.99.232"
  ]
}
EOF

# Generate the certificate
cfssl gencert \
  --ca /ca/fssl/ca.pem \
  --ca-key /ca/fssl/ca-key.pem \
  --config /ca/fssl/ca-config.json \
  /ca/fssl/etcd-ca-csr.json | cfssljson --bare /ca/etcd/etcd-ca

# Verify
openssl x509 -in /ca/etcd/etcd-ca.pem -text -noout
```
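The `openssl x509 -text` output is long, and a missing member IP in the Subject Alternative Names only shows up later as a TLS error between etcd peers. The sketch below scans that output for each expected IP. `san_covers_ips` is a hypothetical helper that reads the text on stdin, which is why it doesn't depend on any particular `openssl` version's flags.

```bash
#!/usr/bin/env bash
# san_covers_ips IP... -- hypothetical helper.
# Reads `openssl x509 -noout -text` output on stdin and fails if any IP is absent from the SANs.
san_covers_ips() {
  local text ip ip_re rc=0
  text=$(cat)
  for ip in "$@"; do
    ip_re="${ip//./[.]}"   # escape dots so the IP is matched literally
    # Require a comma, whitespace or end of line after the IP, so .23 does not match .230
    if ! grep -qE "IP Address:${ip_re}([,[:space:]]|\$)" <<<"$text"; then
      echo "missing SAN: $ip" >&2
      rc=1
    fi
  done
  return $rc
}
```

Usage: `openssl x509 -in /ca/etcd/etcd-ca.pem -noout -text | san_covers_ips 10.80.99.230 10.80.99.231 10.80.99.232`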

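Deploying the etcd cluster

etcd package list

Upload the package to /opt/install/etcd on every node and create the install directories (mkdir -p /opt/etcd/{bin,conf}).

- etcd-v3.5.15-linux-amd64.tar.gz

Master node deployment

Run on the master node.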

```bash
# Unpack the etcd binaries
tar xzvf /opt/install/etcd/etcd-v3.5.15-linux-amd64.tar.gz -C /opt/etcd/bin --strip-components=1

# Create the systemd unit
cat > /etc/systemd/system/etcd.service << 'EOF'
[Unit]
Description=Etcd Server
Documentation=https://github.com/coreos/etcd
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
Restart=always
RestartSec=5s
LimitNOFILE=40000
TimeoutStartSec=0
ExecStart=/opt/etcd/bin/etcd \
  --name etcd-1 \
  --data-dir /data/etcd \
  --listen-client-urls https://10.80.99.230:2379 \
  --advertise-client-urls https://10.80.99.230:2379 \
  --listen-peer-urls https://10.80.99.230:2380 \
  --initial-advertise-peer-urls https://10.80.99.230:2380 \
  --initial-cluster etcd-1=https://10.80.99.230:2380,etcd-2=https://10.80.99.231:2380,etcd-3=https://10.80.99.232:2380 \
  --initial-cluster-token etcd-cluster \
  --initial-cluster-state new \
  --client-cert-auth \
  --trusted-ca-file /ca/fssl/ca.pem \
  --peer-trusted-ca-file /ca/fssl/ca.pem \
  --cert-file /ca/etcd/etcd-ca.pem \
  --key-file /ca/etcd/etcd-ca-key.pem \
  --peer-cert-file /ca/etcd/etcd-ca.pem \
  --peer-key-file /ca/etcd/etcd-ca-key.pem \
  --peer-client-cert-auth

[Install]
WantedBy=multi-user.target
EOF

# Copy the etcd binaries and the certificates to the worker nodes
scp -r /opt/etcd root@k8s-node1:/opt
scp -r /opt/etcd root@k8s-node2:/opt
scp -r /ca root@k8s-node1:/
scp -r /ca root@k8s-node2:/
```

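Node deployment

Run on each worker node. The unit below is for k8s-node1. On k8s-node2, change `--name` to `etcd-3` and every 10.80.99.231 to 10.80.99.232.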

```bash
# Create the systemd unit (k8s-node1 shown)
cat > /etc/systemd/system/etcd.service << 'EOF'
[Unit]
Description=Etcd Server
Documentation=https://github.com/coreos/etcd
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
Restart=always
RestartSec=5s
LimitNOFILE=40000
TimeoutStartSec=0
ExecStart=/opt/etcd/bin/etcd \
  --name etcd-2 \
  --data-dir /data/etcd \
  --listen-client-urls https://10.80.99.231:2379 \
  --advertise-client-urls https://10.80.99.231:2379 \
  --listen-peer-urls https://10.80.99.231:2380 \
  --initial-advertise-peer-urls https://10.80.99.231:2380 \
  --initial-cluster etcd-1=https://10.80.99.230:2380,etcd-2=https://10.80.99.231:2380,etcd-3=https://10.80.99.232:2380 \
  --initial-cluster-token etcd-cluster \
  --initial-cluster-state new \
  --client-cert-auth \
  --trusted-ca-file /ca/fssl/ca.pem \
  --peer-trusted-ca-file /ca/fssl/ca.pem \
  --cert-file /ca/etcd/etcd-ca.pem \
  --key-file /ca/etcd/etcd-ca-key.pem \
  --peer-cert-file /ca/etcd/etcd-ca.pem \
  --peer-key-file /ca/etcd/etcd-ca-key.pem \
  --peer-client-cert-auth

[Install]
WantedBy=multi-user.target
EOF
```
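The three etcd units differ only in the member name and the node IP, so hand-editing each copy is where mistakes creep in. As a sketch, a single template can render all three. `render_etcd_unit` and `INITIAL_CLUSTER` are hypothetical names, while the paths and flags are the ones used in the units above.

```bash
#!/usr/bin/env bash
# Hypothetical template for the etcd unit; member list as planned in this guide.
INITIAL_CLUSTER="etcd-1=https://10.80.99.230:2380,etcd-2=https://10.80.99.231:2380,etcd-3=https://10.80.99.232:2380"

# render_etcd_unit NAME IP -- prints the unit to stdout.
# In the unquoted heredoc, "\\" produces the literal backslash systemd uses for line continuation.
render_etcd_unit() {
  local name=$1 ip=$2
  cat <<EOF
[Unit]
Description=Etcd Server
Documentation=https://github.com/coreos/etcd
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
Restart=always
RestartSec=5s
LimitNOFILE=40000
TimeoutStartSec=0
ExecStart=/opt/etcd/bin/etcd \\
  --name ${name} \\
  --data-dir /data/etcd \\
  --listen-client-urls https://${ip}:2379 \\
  --advertise-client-urls https://${ip}:2379 \\
  --listen-peer-urls https://${ip}:2380 \\
  --initial-advertise-peer-urls https://${ip}:2380 \\
  --initial-cluster ${INITIAL_CLUSTER} \\
  --initial-cluster-token etcd-cluster \\
  --initial-cluster-state new \\
  --client-cert-auth \\
  --trusted-ca-file /ca/fssl/ca.pem \\
  --peer-trusted-ca-file /ca/fssl/ca.pem \\
  --cert-file /ca/etcd/etcd-ca.pem \\
  --key-file /ca/etcd/etcd-ca-key.pem \\
  --peer-cert-file /ca/etcd/etcd-ca.pem \\
  --peer-key-file /ca/etcd/etcd-ca-key.pem \\
  --peer-client-cert-auth

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, on k8s-node2: `render_etcd_unit etcd-3 10.80.99.232 > /etc/systemd/system/etcd.service`.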

Starting the etcd service

Run on all nodes.

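```bash
# Start the service. Start it on all three nodes at roughly the same time:
# with Type=notify, "systemctl start" on the first member may not return until enough peers are up.
sudo systemctl daemon-reload
sudo systemctl enable etcd.service
sudo systemctl start etcd.service
sudo systemctl cat etcd.service

# Stop the service (only when needed)
sudo systemctl stop etcd.service
sudo systemctl disable etcd.service

# View the logs
sudo systemctl status etcd.service -l --no-pager
sudo journalctl -u etcd.service -l --no-pager | less
sudo journalctl -f -u etcd.service
```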

Cluster health

Run on any node.

```bash
ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
  --endpoints 10.80.99.230:2379,10.80.99.231:2379,10.80.99.232:2379 \
  --cacert /ca/fssl/ca.pem \
  --cert /ca/etcd/etcd-ca.pem \
  --key /ca/etcd/etcd-ca-key.pem \
  endpoint health

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/ca/fssl/ca.pem --cert=/ca/etcd/etcd-ca.pem --key=/ca/etcd/etcd-ca-key.pem --endpoints="https://10.80.99.230:2379,https://10.80.99.231:2379,https://10.80.99.232:2379" endpoint health --write-out=table
```

The output looks like this:

```text
10.80.99.231:2379 is healthy: successfully committed proposal: took = 12.027532ms
10.80.99.230:2379 is healthy: successfully committed proposal: took = 43.509585ms
10.80.99.232:2379 is healthy: successfully committed proposal: took = 44.077138ms

+---------------------------+--------+-------------+-------+
|         ENDPOINT          | HEALTH |    TOOK     | ERROR |
+---------------------------+--------+-------------+-------+
| https://10.80.99.232:2379 |   true |  8.701818ms |       |
| https://10.80.99.230:2379 |   true | 37.862599ms |       |
| https://10.80.99.231:2379 |   true | 38.497477ms |       |
+---------------------------+--------+-------------+-------+
```
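In a script, you may want to stop before deploying the control plane unless every etcd member reports healthy. This is a small sketch that counts the "is healthy" lines in the plain (non-table) output shown above. `require_healthy` is a hypothetical helper, and piping with `2>&1` ensures that any unhealthy lines are seen too, whichever stream etcdctl writes them to.

```bash
#!/usr/bin/env bash
# require_healthy N -- hypothetical helper.
# Reads `etcdctl endpoint health` output on stdin; fails if fewer than N endpoints are healthy.
require_healthy() {
  local want=$1 got
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the count usable.
  # " is healthy: " does not match the "is unhealthy:" wording.
  got=$(grep -c ' is healthy: ' || true)
  if (( got < want )); then
    echo "only $got of $want etcd endpoints are healthy" >&2
    return 1
  fi
}
```

Usage: `ETCDCTL_API=3 /opt/etcd/bin/etcdctl ... endpoint health 2>&1 | require_healthy 3`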

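etcd is now deployed and running. Next, deploy the components on each node.

Deploying the Master node components

Run on the master node.

Creating the api-server certificate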

```bash
mkdir -p /opt/kubernetes/{bin,conf,ssl,logs}
mkdir -p /opt/kubernetes/ssl/{kube-apiserver,kubectl,kube-controller-manager,kube-scheduler,kubelet,kube-proxy}

# kube-apiserver certificate signing request
cat > /ca/fssl/apiserver-ca-csr.json << 'EOF'
{
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "O": "kubemsd",
      "OU": "system",
      "L": "Your_City",
      "ST": "Your_State",
      "C": "CN"
    }
  ],
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.80.99.230",
    "10.80.99.231",
    "10.80.99.232",
    "10.1.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ]
}
EOF

# Generate the api-server certificate
cd /ca/fssl
cfssl gencert \
  --ca ca.pem \
  --ca-key ca-key.pem \
  --config ca-config.json \
  apiserver-ca-csr.json | cfssljson --bare /opt/kubernetes/ssl/kube-apiserver/kube-apiserver
```
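The `10.1.0.1` entry in `hosts` is the ClusterIP of the built-in `kubernetes` Service, which is always the first address of `--service-cluster-ip-range` (10.1.0.0/24 in the api-server configuration). If you choose a different range, this SAN has to change with it. The helper below is a hypothetical, IPv4-only sketch that derives such addresses with plain bash arithmetic.

```bash
#!/usr/bin/env bash
# nth_ip_in_cidr CIDR N -- hypothetical helper (IPv4 only).
# Prints the address at offset N from the network address of CIDR.
nth_ip_in_cidr() {
  local cidr=$1 n=$2 a b c d prefix size base v
  prefix=${cidr#*/}
  IFS=. read -r a b c d <<<"${cidr%/*}"
  size=$(( 1 << (32 - prefix) ))                                      # addresses in the block
  base=$(( ((a << 24) | (b << 16) | (c << 8) | d) & ~(size - 1) ))   # clear the host bits
  if (( n < 0 || n >= size )); then
    echo "offset $n is outside $cidr" >&2
    return 1
  fi
  v=$(( base + n ))
  echo "$(( (v >> 24) & 255 )).$(( (v >> 16) & 255 )).$(( (v >> 8) & 255 )).$(( v & 255 ))"
}
```

For example, `nth_ip_in_cidr 10.1.0.0/24 1` prints `10.1.0.1`, the address that must be in the certificate.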

```bash
# Generate the bootstrap token file (format: token,user,uid,"group")
cat > /opt/kubernetes/conf/token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

cat /opt/kubernetes/conf/token.csv
```
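kube-apiserver reads `--token-auth-file` as CSV: token, user name, user UID, optionally followed by a quoted, comma-separated group list. A malformed line leaves kubelet bootstrap failing with an authentication error. This is a hypothetical validator. The 32-lowercase-hex token pattern matches what the `head | od | tr` pipeline above produces, not a general requirement of the format.

```bash
#!/usr/bin/env bash
# validate_token_csv FILE -- hypothetical helper.
# Every non-empty line must look like: <32 hex chars>,<user>,<uid>[,"<groups>"]
validate_token_csv() {
  local file=$1 line n=0
  local re='^[0-9a-f]{32},[^,]+,[^,]+(,"[^"]*")?$'
  while IFS= read -r line || [[ -n $line ]]; do
    [[ -z $line ]] && continue           # tolerate blank lines
    n=$((n + 1))
    if [[ ! $line =~ $re ]]; then
      echo "line $n does not match token,user,uid[,\"groups\"]: $line" >&2
      return 1
    fi
  done < "$file"
  (( n > 0 )) || { echo "no tokens in $file" >&2; return 1; }
}
```

Usage: `validate_token_csv /opt/kubernetes/conf/token.csv && echo ok`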

Create the api-server configuration file /opt/kubernetes/conf/kube-apiserver.conf:

```bash
cat > /opt/kubernetes/conf/kube-apiserver.conf << 'EOF'
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
--anonymous-auth=false \
--bind-address=10.80.99.230 \
--secure-port=6443 \
--advertise-address=10.80.99.230 \
--authorization-mode=Node,RBAC \
--runtime-config=api/all=true \
--enable-bootstrap-token-auth \
--service-cluster-ip-range=10.1.0.0/24 \
--token-auth-file=/opt/kubernetes/conf/token.csv \
--service-node-port-range=30000-32767 \
--tls-cert-file=/opt/kubernetes/ssl/kube-apiserver/kube-apiserver.pem \
--tls-private-key-file=/opt/kubernetes/ssl/kube-apiserver/kube-apiserver-key.pem \
--client-ca-file=/ca/fssl/ca.pem \
--kubelet-client-certificate=/opt/kubernetes/ssl/kube-apiserver/kube-apiserver.pem \
--kubelet-client-key=/opt/kubernetes/ssl/kube-apiserver/kube-apiserver-key.pem \
--service-account-key-file=/ca/fssl/ca-key.pem \
--service-account-signing-key-file=/ca/fssl/ca-key.pem \
--service-account-issuer=api \
--etcd-cafile=/ca/fssl/ca.pem \
--etcd-certfile=/ca/etcd/etcd-ca.pem \
--etcd-keyfile=/ca/etcd/etcd-ca-key.pem \
--etcd-servers=https://10.80.99.230:2379,https://10.80.99.231:2379,https://10.80.99.232:2379 \
--allow-privileged=true \
--apiserver-count=3 \
--audit-log-maxage=30 \
--audit-log-maxbackup=3 \
--audit-log-maxsize=100 \
--audit-log-path=/opt/kubernetes/logs/kube-apiserver-audit.log \
--event-ttl=1h \
--v=4"
EOF
```

k8s package list

Upload the package to /opt/install/k8s on the master node.

- kubernetes-server-linux-amd64.tar.gz

Starting the api-server service

```bash
# systemd unit for kube-apiserver
cat > /etc/systemd/system/kube-apiserver.service << 'EOF'
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

# Unpack the server package and install the binaries
cd /opt/install/k8s
tar -zxvf kubernetes-server-linux-amd64.tar.gz
cd /opt/install/k8s/kubernetes/server/bin
cp kubectl kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy /opt/kubernetes/bin/

# Enable and start kube-apiserver, then check its status
systemctl daemon-reload
systemctl enable kube-apiserver.service
systemctl start kube-apiserver.service
systemctl status kube-apiserver.service

# Stop it (only when needed)
systemctl stop kube-apiserver.service
```

Setting up kubectl (the command-line tool for operating the k8s cluster)

```bash
# Install kubectl (from /opt/install/k8s/kubernetes/server/bin)
install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
kubectl version --client

# Certificate signing request that lets the admin user call the api-server
cat > /ca/fssl/admin-csr.json << 'EOF'
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Your_City",
      "ST": "Your_State",
      "O": "system:masters",
      "OU": "system"
    }
  ]
}
EOF

# Generate the admin key and certificate, then build the kubeconfig
cd /ca/fssl
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare /opt/kubernetes/ssl/kubectl/admin
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.80.99.230:6443 --kubeconfig=/opt/kubernetes/ssl/kubectl/kube.config

cd /opt/kubernetes/ssl/kubectl
kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config
kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config
kubectl config use-context kubernetes --kubeconfig=kube.config

mkdir -p /root/.kube
cp kube.config /root/.kube/config

# Allow the api-server (certificate CN "kubernetes") to call the kubelet API
kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config

# Verify
kubectl cluster-info
kubectl get cs
```

Creating the controller-manager certificate

```bash
# Certificate signing request (hosts: loopback and the master IP)
cat > /ca/fssl/kube-controller-manager-csr.json << 'EOF'
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "hosts": [
    "127.0.0.1",
    "10.80.99.230"
  ],
  "names": [
    {
      "C": "CN",
      "L": "Your_City",
      "ST": "Your_State",
      "O": "system:kube-controller-manager",
      "OU": "system"
    }
  ]
}
EOF

# Generate the certificate (run from the CA directory)
cd /ca/fssl
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare /opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager

# Create the kubeconfig
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.80.99.230:6443 --kubeconfig=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager.kubeconfig

# Add the credentials and context
cd /opt/kubernetes/ssl/kube-controller-manager
kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig
kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```

Creating the controller-manager configuration

```bash
# --cluster-cidr is split into one /24 Pod subnet per node by default,
# so it must be larger than /24 to cover all three nodes.
cat > /opt/kubernetes/conf/kube-controller-manager.conf << 'EOF'
KUBE_CONTROLLER_MANAGER_OPTS="--secure-port=10257 \
--bind-address=127.0.0.1 \
--kubeconfig=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager.kubeconfig \
--service-cluster-ip-range=10.1.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/ca/fssl/ca.pem \
--cluster-signing-key-file=/ca/fssl/ca-key.pem \
--allocate-node-cidrs=true \
--cluster-cidr=10.2.0.0/16 \
--root-ca-file=/ca/fssl/ca.pem \
--service-account-private-key-file=/ca/fssl/ca-key.pem \
--leader-elect=true \
--feature-gates=RotateKubeletServerCertificate=true \
--controllers=*,bootstrapsigner,tokencleaner \
--horizontal-pod-autoscaler-sync-period=10s \
--tls-cert-file=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager.pem \
--tls-private-key-file=/opt/kubernetes/ssl/kube-controller-manager/kube-controller-manager-key.pem \
--use-service-account-credentials=true \
--cluster-signing-duration=87600h0m0s \
--v=2"
EOF
```
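With `--allocate-node-cidrs=true`, the controller-manager carves `--cluster-cidr` into one Pod subnet per node, sized by `--node-cidr-mask-size` (default /24 for IPv4). A /24 cluster CIDR would therefore yield a single node subnet, too few for three nodes. The hypothetical helper below just makes that arithmetic explicit.

```bash
#!/usr/bin/env bash
# node_cidr_capacity CLUSTER_CIDR [NODE_MASK] -- hypothetical helper.
# Prints how many per-node subnets of size /NODE_MASK (default 24) fit in CLUSTER_CIDR.
node_cidr_capacity() {
  local cidr=$1 node_mask=${2:-24} prefix
  prefix=${cidr#*/}
  if (( node_mask < prefix )); then
    echo 0            # node subnets larger than the whole cluster range: none fit
    return
  fi
  echo $(( 1 << (node_mask - prefix) ))
}
```

`node_cidr_capacity 10.2.0.0/24` prints `1`, while `node_cidr_capacity 10.2.0.0/16` prints `256`.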

Starting the controller-manager service

```bash
cat > /usr/lib/systemd/system/kube-controller-manager.service << 'EOF'
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/opt/kubernetes/conf/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# Start the service
systemctl daemon-reload
systemctl enable kube-controller-manager.service
systemctl start kube-controller-manager.service
systemctl status kube-controller-manager.service
```

Creating the scheduler certificate

```bash
cd /ca/fssl
cat > /ca/fssl/kube-scheduler-csr.json << 'EOF'
{
  "CN": "system:kube-scheduler",
  "hosts": [
    "127.0.0.1",
    "10.80.99.230"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Your_City",
      "ST": "Your_State",
      "O": "system:kube-scheduler",
      "OU": "system"
    }
  ]
}
EOF

# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare /opt/kubernetes/ssl/kube-scheduler/kube-scheduler

# Generate the kubeconfig
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.80.99.230:6443 --kubeconfig=/opt/kubernetes/ssl/kube-scheduler/kube-scheduler.kubeconfig
cd /opt/kubernetes/ssl/kube-scheduler/
kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig
kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig
```
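The admin, controller-manager and scheduler kubeconfigs are all built with the same four `kubectl config` calls. As a sketch, they could be wrapped in one function. `make_kubeconfig`, `CA_FILE` and `APISERVER` are hypothetical names with defaults taken from this guide, and the context is named after the user (the admin kubeconfig above uses a context called `kubernetes` instead).

```bash
#!/usr/bin/env bash
# make_kubeconfig USER CERT KEY OUT -- hypothetical wrapper around the four kubectl config calls.
# CA_FILE and APISERVER may be set in the environment to override the defaults.
make_kubeconfig() {
  local user=$1 cert=$2 key=$3 out=$4
  local ca=${CA_FILE:-/ca/fssl/ca.pem} server=${APISERVER:-https://10.80.99.230:6443}
  kubectl config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$out" || return
  kubectl config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" || return
  kubectl config set-context "$user" --cluster=kubernetes --user="$user" --kubeconfig="$out" || return
  kubectl config use-context "$user" --kubeconfig="$out"
}
```

Usage, equivalent to the scheduler steps above:

`cd /opt/kubernetes/ssl/kube-scheduler && make_kubeconfig system:kube-scheduler kube-scheduler.pem kube-scheduler-key.pem kube-scheduler.kubeconfig`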

Starting the scheduler service

```bash
cat > /opt/kubernetes/conf/kube-scheduler.conf << 'EOF'
KUBE_SCHEDULER_OPTS="--secure-port=10259 \
--leader-elect \
--kubeconfig=/opt/kubernetes/ssl/kube-scheduler/kube-scheduler.kubeconfig \
--bind-address=127.0.0.1 \
--v=2"
EOF

# systemd unit
cat > /usr/lib/systemd/system/kube-scheduler.service << 'EOF'
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=kube-apiserver.service
Requires=kube-apiserver.service

[Service]
EnvironmentFile=/opt/kubernetes/conf/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# Start the service
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl status kube-scheduler
```

Verifying the Master components

All Master components are now deployed.

```bash
# Verify
kubectl get cs
```

Output:

```text
NAME                 STATUS    MESSAGE   ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   ok
```

The Master node is now deployed and running. Next, deploy the worker Nodes.

Deploying the Node components

Run on the master node.

Deploying kubelet

```bash
# Read the bootstrap token generated earlier (empty lines are skipped)
BOOTSTRAP_TOKEN=$(awk -F "," 'NF {print $1}' /opt/kubernetes/conf/token.csv)

# Create the kubelet certificate directory and working directory
mkdir -p /opt/kubernetes/ssl/kubelet
mkdir -p /var/lib/kubelet

# Build the bootstrap kubeconfig
cd /ca/fssl
kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://10.80.99.230:6443 --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig
kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig
cd /opt/kubernetes/ssl/kubelet/
kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig

# Role bindings for the kubelet-bootstrap user: the first grants it full cluster-admin
# rights, the second lets it submit node certificate signing requests
kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap
kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig

# Verify
kubectl describe clusterrolebinding cluster-system-anonymous
kubectl describe clusterrolebinding kubelet-bootstrap
```

```bash
# kubelet configuration file
cat > /opt/kubernetes/conf/kubelet.json << 'EOF'
{
  "kind": "KubeletConfiguration",
  "apiVersion": "kubelet.config.k8s.io/v1beta1",
  "authentication": {
    "x509": {
      "clientCAFile": "/ca/fssl/ca.pem"
    },
    "webhook": {
      "enabled": true,
      "cacheTTL": "2m0s"
    },
    "anonymous": {
      "enabled": false
    }
  },
  "authorization": {
    "mode": "Webhook",
    "webhook": {
      "cacheAuthorizedTTL": "5m0s",
      "cacheUnauthorizedTTL": "30s"
    }
  },
  "address": "10.80.99.230",
  "port": 10250,
  "readOnlyPort": 10255,
  "cgroupDriver": "systemd",
  "hairpinMode": "promiscuous-bridge",
  "serializeImagePulls": false,
  "clusterDomain": "cluster.local.",
  "clusterDNS": ["10.1.0.2"]
}
EOF
```

```bash
# systemd unit for kubelet.
# --root-dir is the kubelet's own data directory (default /var/lib/kubelet),
# not the CNI configuration directory.
cat > /usr/lib/systemd/system/kubelet.service << 'EOF'
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \
  --bootstrap-kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet-bootstrap.kubeconfig \
  --cert-dir=/opt/kubernetes/ssl/kubelet \
  --kubeconfig=/opt/kubernetes/ssl/kubelet/kubelet.kubeconfig \
  --config=/opt/kubernetes/conf/kubelet.json \
  --container-runtime-endpoint=unix:///run/containerd/containerd.sock \
  --rotate-certificates \
  --pod-infra-container-image=your_harbor_url/google_containers/pause:3.9 \
  --root-dir=/var/lib/kubelet \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

On every worker Node, create the directories:

```bash
mkdir -p /opt/kubernetes/{bin,conf,ssl,logs}
mkdir -p /opt/kubernetes/ssl/{kubelet,kube-proxy}
mkdir -p /var/lib/kubelet
```

On the master node, distribute the binaries and configuration:

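```bash
# Binaries
cd /opt/kubernetes/bin
scp kubelet kube-proxy root@k8s-node1:/opt/kubernetes/bin/
scp kubelet kube-proxy root@k8s-node2:/opt/kubernetes/bin/

# Bootstrap kubeconfig
cd /opt/kubernetes/ssl/kubelet
scp kubelet-bootstrap.kubeconfig root@k8s-node1:/opt/kubernetes/ssl/kubelet/
scp kubelet-bootstrap.kubeconfig root@k8s-node2:/opt/kubernetes/ssl/kubelet/

# kubelet configuration
scp /opt/kubernetes/conf/kubelet.json root@k8s-node1:/opt/kubernetes/conf
scp /opt/kubernetes/conf/kubelet.json root@k8s-node2:/opt/kubernetes/conf

# kubelet systemd unit
scp /usr/lib/systemd/system/kubelet.service root@k8s-node1:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/kubelet.service root@k8s-node2:/usr/lib/systemd/system/
```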

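On each worker Node, change the kubelet listen address to that node's own IP:

```bash
# On k8s-node1
sed -i 's/10.80.99.230/10.80.99.231/g' /opt/kubernetes/conf/kubelet.json
# On k8s-node2
sed -i 's/10.80.99.230/10.80.99.232/g' /opt/kubernetes/conf/kubelet.json
```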
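A global `sed` over the master IP works here because that IP appears only in the `"address"` field. A more targeted variant rewrites just that field and rejects anything that isn't an IPv4 address. `set_kubelet_address` is a hypothetical helper, and it assumes the `"address": "…"` pair sits on one line, as in the file above.

```bash
#!/usr/bin/env bash
# set_kubelet_address FILE IP -- hypothetical helper.
# Replaces only the value of the "address" field in a kubelet JSON config.
set_kubelet_address() {
  local file=$1 ip=$2
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  [[ $ip =~ $re ]] || { echo "not an IPv4 address: $ip" >&2; return 1; }
  # \1 keeps the key, colon and opening quote; sed back-references are single-digit,
  # so "\1" followed by the IP's leading digits is still read as \1.
  sed -i -E 's/("address"[[:space:]]*:[[:space:]]*")[^"]*"/\1'"$ip"'"/' "$file"
}
```

Usage on k8s-node2: `set_kubelet_address /opt/kubernetes/conf/kubelet.json 10.80.99.232`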

Start the service on every node:

```bash
systemctl daemon-reload
systemctl enable --now kubelet
systemctl status kubelet

# Check the cluster nodes
kubectl get nodes
```

Output:

```text
NAME         STATUS     ROLES    AGE     VERSION
k8s-master   NotReady   <none>   7d20h   v1.30.2
k8s-node1    NotReady   <none>   7d20h   v1.30.2
k8s-node2    NotReady   <none>   7d20h   v1.30.2
```
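Once a network plugin is installed, the nodes should move from NotReady to Ready. To wait for that in a script, you can count Ready nodes in the `kubectl get nodes` table. This is a minimal sketch. `count_ready` is a hypothetical helper that counts only an exact `Ready` status, so a value such as `Ready,SchedulingDisabled` is not counted.

```bash
#!/usr/bin/env bash
# count_ready -- hypothetical helper.
# Reads `kubectl get nodes` output on stdin and prints how many nodes have STATUS exactly "Ready".
count_ready() {
  # Skip the header row; column 2 is STATUS. "n + 0" prints 0 when nothing matched.
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

Possible polling loop: `until [ "$(kubectl get nodes | count_ready)" -ge 3 ]; do sleep 5; done`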

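All k8s nodes are now deployed and have joined the cluster. Their status is NotReady rather than Ready because no network (CNI) plugin has been deployed yet. The cluster cannot be used until one is, and any Pod started now stays pending. The next section deploys the k8s network components and introduces how they work.

k8s network components

(To be continued.)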
